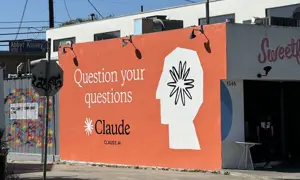

Anthropic says some Claude models can now end ‘harmful or abusive’ conversations

techcrunch.com/2025/08/16/anthropic-says-some-claude-models-can-now-end-harmful-or-abusive-conversations

Anthropic has announced new capabilities that will allow some of its newest, largest models to end conversations in what the company describes as “rare, extreme cases of persistently harmful or abusive user interactions.” Strikingly, Anthropic says it’s doing this not to protect the…

This story appeared on techcrunch.com, 2025-08-16 15:50:05.